Research

Virtual Reality

SliVR: A 360° VR-Hub for Fast Selections in Multiple Virtual Environments

We present a VR hub designed to enhance multitasking by arranging multiple virtual environments (VEs) in a regular polygon formation around the user. Each VE is represented by a life-sized 2D preview that supports hub-based interactions.

IEEE ISMAR '25 — Conference Paper

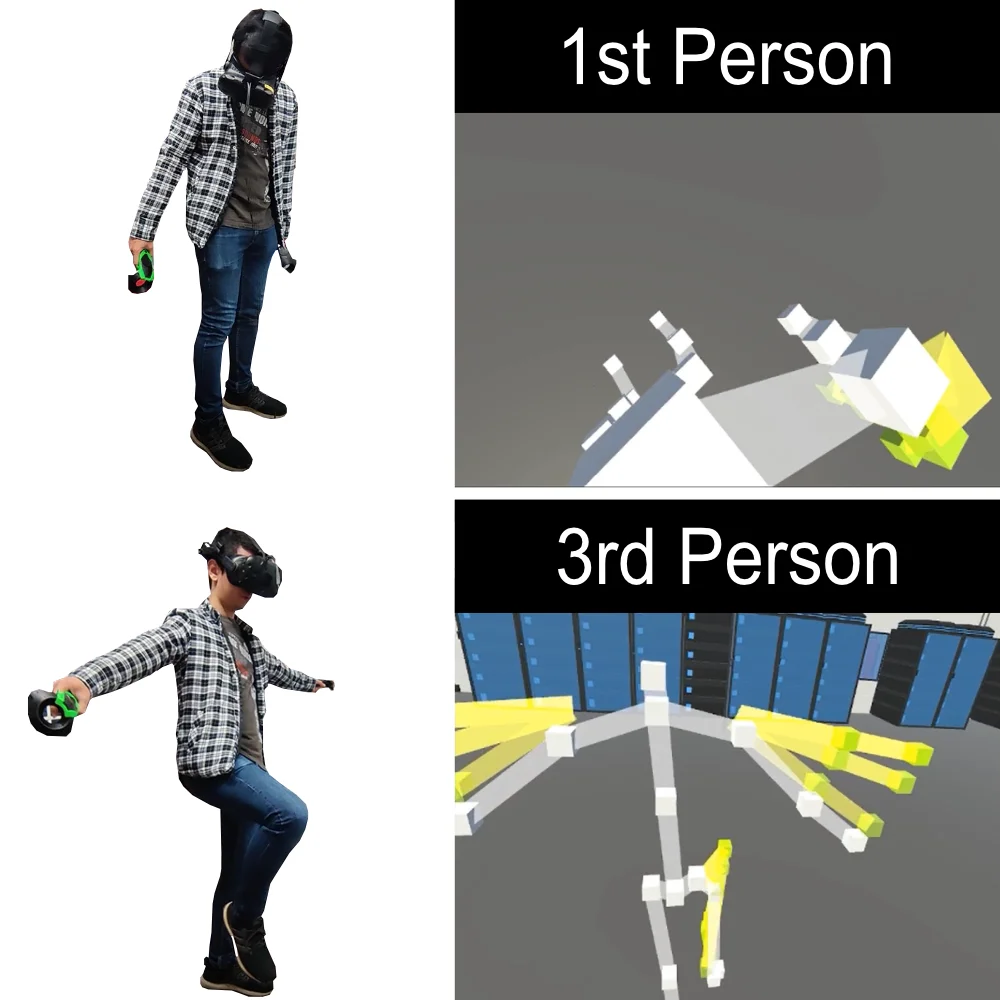

Understanding Perspectives for Single- and Multi-Limb Movement Guidance

Movement guidance in virtual reality has many applications, ranging from physical therapy and assistive systems to sports learning. In a user study, we investigated how perspective, feedback, and movement properties influence the accuracy of movement guidance.

ACM VRST '22 — Full Paper

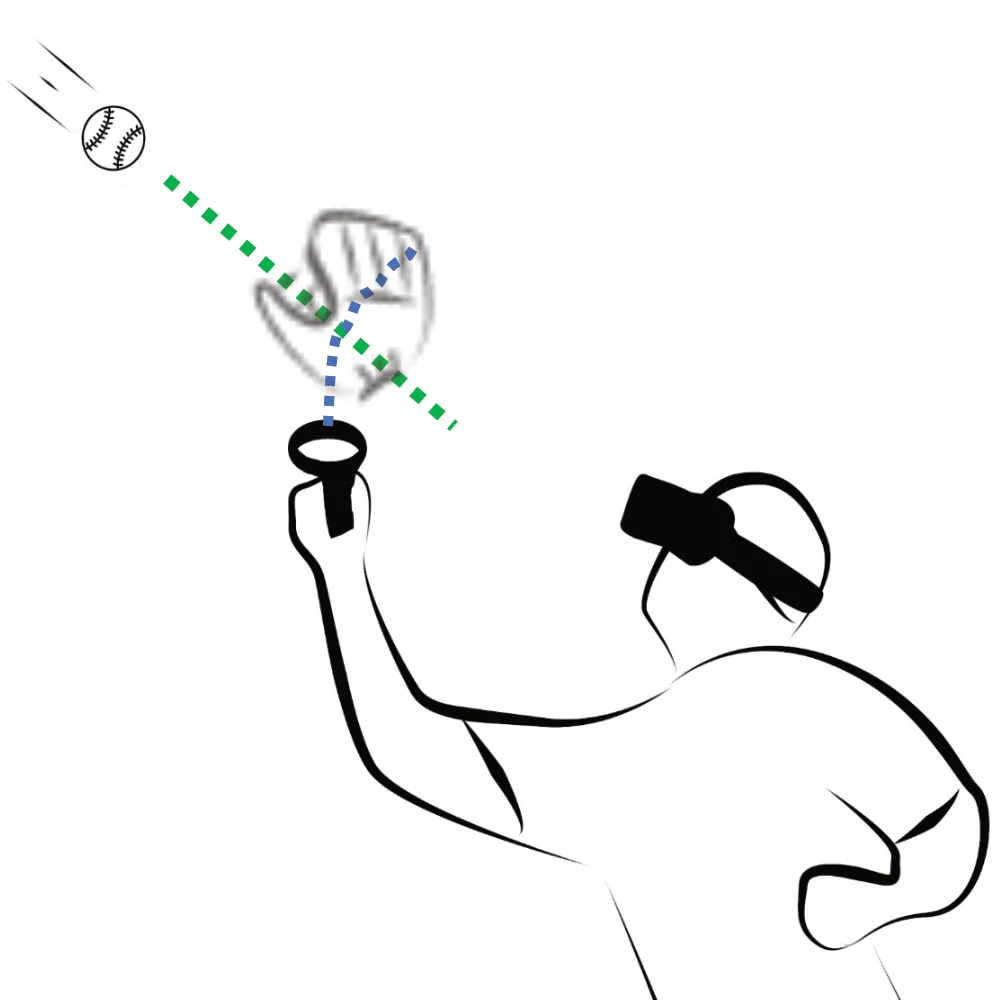

So Predictable! Continuous 3D Hand Trajectory Prediction in Virtual Reality

We contribute a novel user- and activity-independent, kinematics-based regressive model for continuous 3D hand trajectory prediction in VR. This enables early estimation of future events, such as collisions between the user's hand and virtual objects or interactions with UI widgets.

ACM UIST '21 — Full Paper

VRSketchPen: Unconstrained Haptic Assistance for Sketching in VR

Accurate sketching in virtual 3D environments is challenging. We developed VRSketchPen, which uses two haptic modalities: pneumatic force feedback to simulate the contact pressure of the pen against virtual surfaces and vibrotactile feedback to mimic textures while moving the pen over virtual surfaces.

ACM VRST '20 — Full Paper

Haptic Feedback

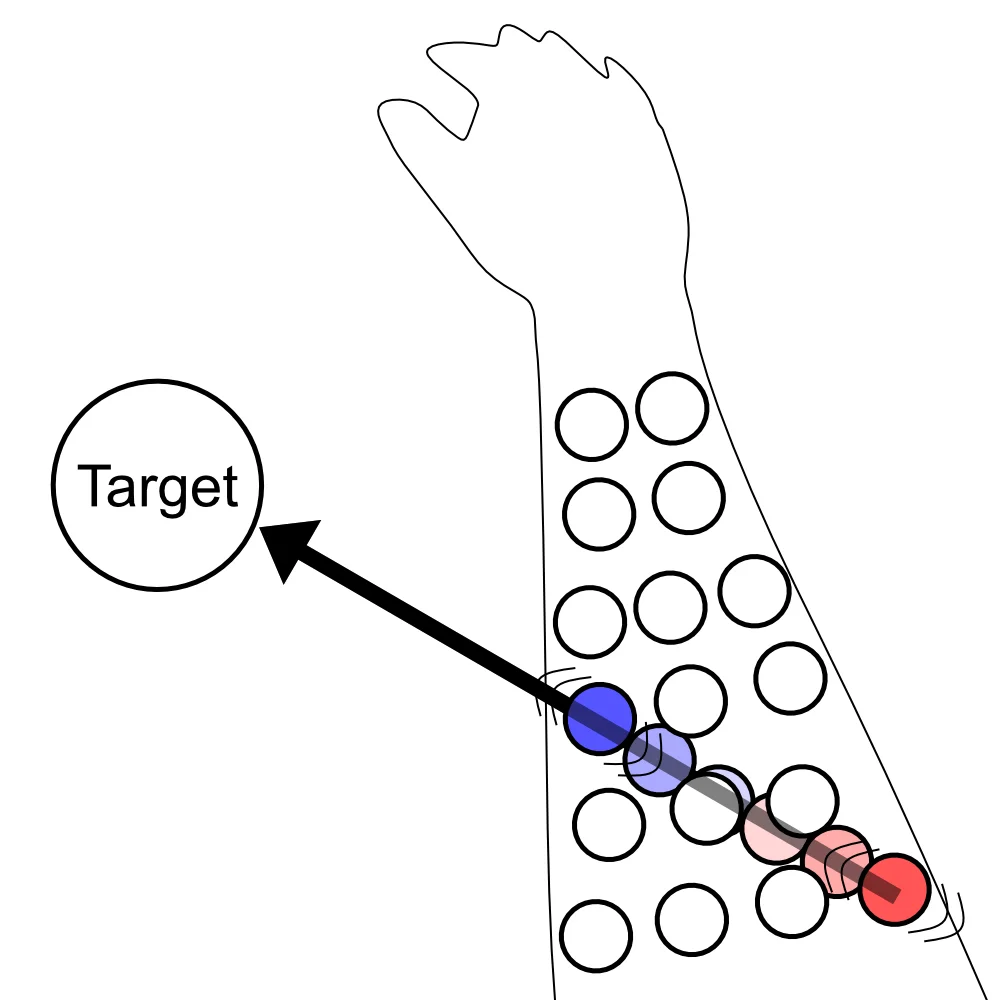

Tactile Vectors for Omnidirectional Arm Guidance

We introduce and study two omnidirectional movement guidance techniques that use two vibrotactile actuators to convey a movement direction. The first vibrotactile actuator defines the starting point and the second actuator communicates the endpoint of the direction vector.

Augmented Humans '23 — Full Paper

VibroMap: Understanding the Spacing of Vibrotactile Actuators

We contribute an understanding of how vibrotactile actuators can be spaced on the body. In two experiments, we systematically investigate vibrotactile perception on the wrist, forearm, upper arm, back, torso, thigh, and leg, each in transverse and longitudinal body orientation.

PACM IMWUT '20 — Journal Article

Visuo-tactile AR for Enhanced Safety Awareness in HRI

We describe our approach to developing a multimodal AR system that combines visual and tactile cues to enhance people's safety awareness in human-robot interaction (HRI) tasks.

Workshop Paper at HRI 2020

Robots and Drones

Meaningful Telerobots in Informal Care

While telerobots offer potentially unique ways to shape human-human relationships, current concepts often imitate existing practices. Using informal care as an example, we explored whether exploiting the unique possibilities of telerobots can lead to meaningful extensions of existing care practices or to entirely new ones.

ACM NordiCHI '22 — Full Paper

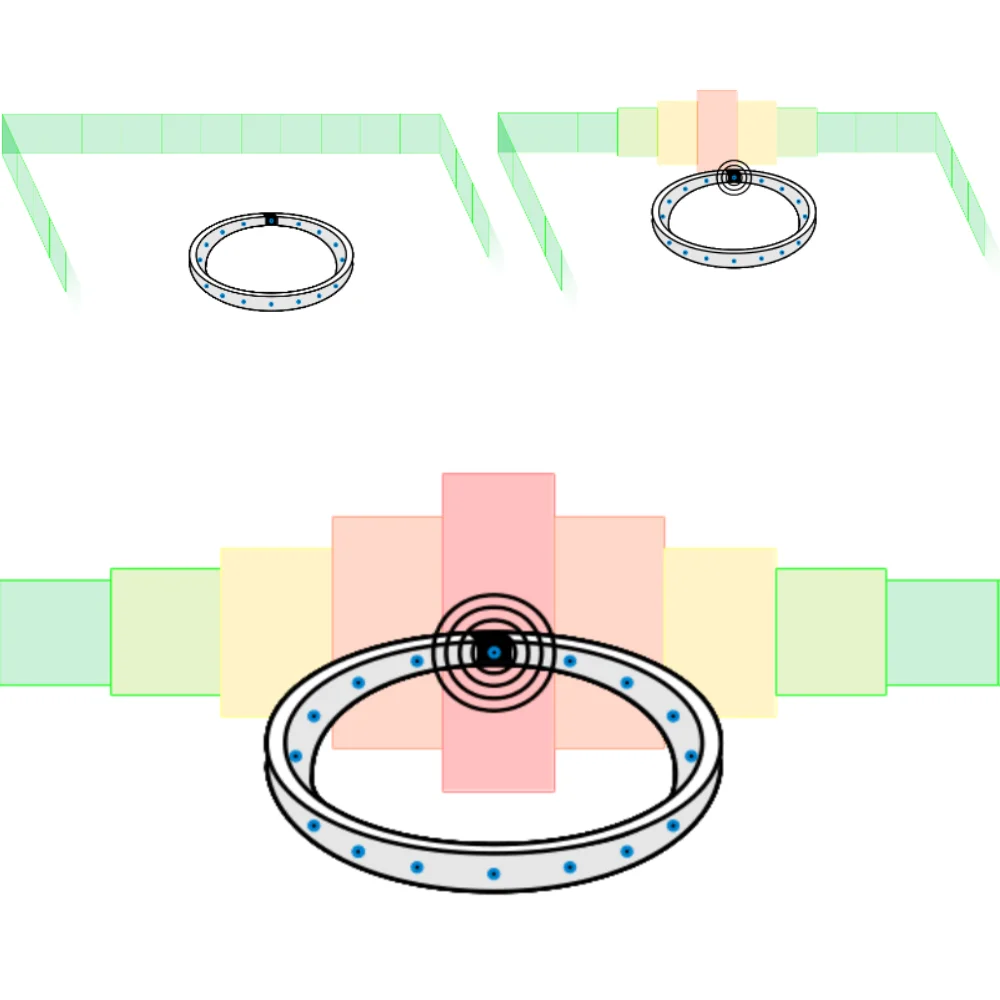

Understanding Drone Landing on the Human Body

We envision the human body as a platform for fast take-off and landing of drones in entertainment and professional uses. In an online study, we investigated the suitability of various body locations for landing, and we then tested these findings in a follow-up VR evaluation.

ACM MobileHCI '21 — Full Paper

Interactive Skin

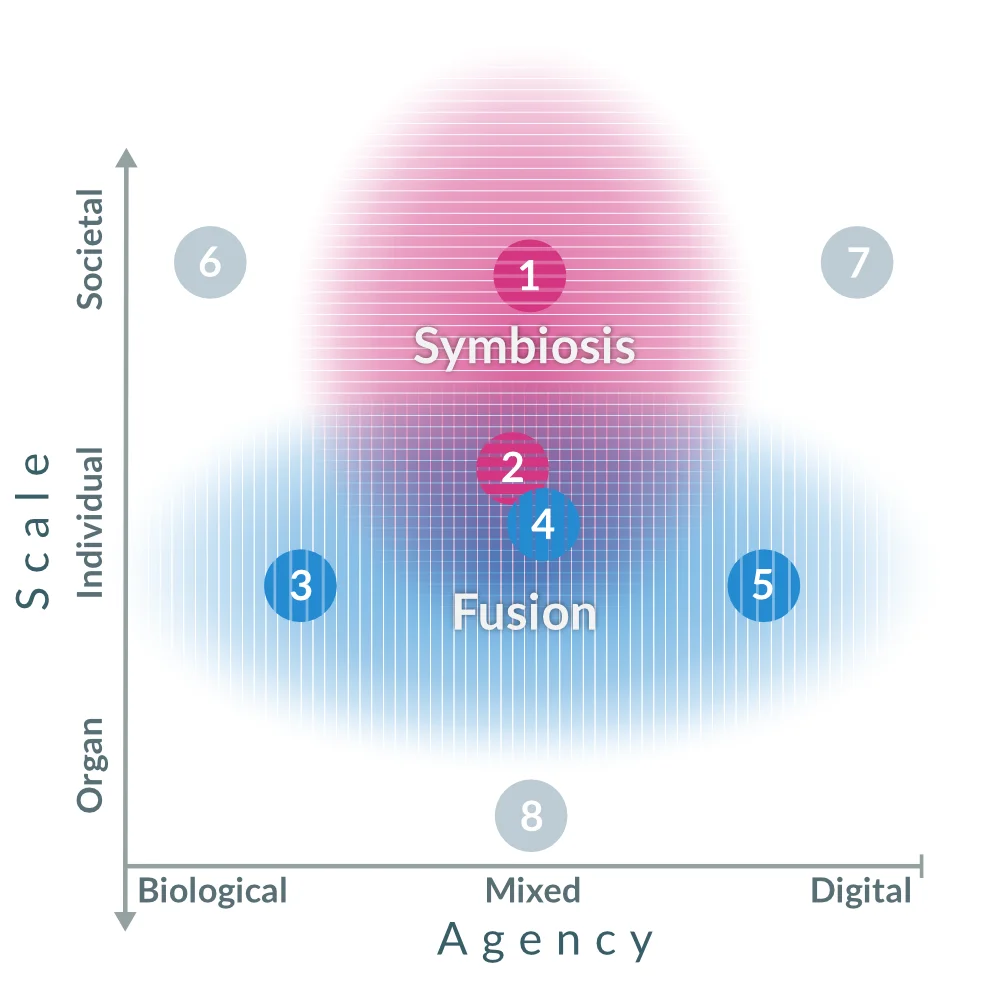

Next Steps in Human-Computer Integration

Human-Computer Integration (HInt) is an emerging paradigm in which computational and human systems are closely interwoven. We present a set of challenges for HInt research, formulated over the course of a five-day workshop with 29 experts.

ACM CHI '20 — Full Paper

DeformWear: Deformation Input on Tiny Wearable Devices

We introduce DeformWear, tiny wearable devices that leverage single-point deformation input on various body locations. Despite the small input surface, DeformWear enables expressive and precise input using high-resolution pressure, shear, and pinch deformations.

PACM IMWUT '17 — Journal Article

SkinMarks: Enabling Interactions on Body Landmarks

SkinMarks are novel skin-worn I/O devices for precisely localized input and output on fine body landmarks. SkinMarks comprise skin electronics on temporary rub-on tattoos. They conform to fine wrinkles and are compatible with strongly curved and elastic body locations.

ACM CHI '17 — Full Paper

iSkin: Stretchable On-Body Touch Sensors

iSkin is a novel class of skin-worn sensors for touch input on the body. It is a very thin, flexible, and stretchable sensor overlay made of biocompatible materials. It can be produced in different shapes and sizes to suit various body locations, such as the finger, forearm, or ear.

ACM CHI '15 — Full Paper, Best Paper Award (top 1%)

Understanding How People Use Skin as an Input Surface

Skin is fundamentally different from off-body touch surfaces. In an empirical study, we investigate the characteristics of various skin-specific input modalities, analyze what kinds of gestures people perform on skin, and study which input locations they prefer.

ACM CHI '14 — Full Paper

Visualizations

CameraReady: Display Types and Visualizations for Posture Guidance

Computer-supported posture guidance is used in sports, dance training, artistic expression through movement, and learning gestures for interaction. We compared five display types with different screen sizes and, on each device, three common visualizations.

ACM DIS '21 — Full Paper

ProjectorKit: Easing Rapid Prototyping of Mobile Projections

Researchers have developed interaction concepts based on mobile projectors, yet pursuing work in this area is cumbersome and time-consuming. To mitigate this problem, we contribute ProjectorKit, a flexible open-source toolkit that eases rapid prototyping of mobile projector interaction techniques.

ACM MobileHCI '13 — Short Paper

Combining Mobile Projectors and Stationary Displays

Focus plus context displays combine high-resolution detail and lower-resolution overview using displays of different pixel densities. In this paper, we explore focus plus context displays using one or more mobile projectors in combination with a stationary display.

GRAND '13 — Research Note, Honorable Mention Paper Award

Check out more research or Prof. Weigel's prior Master's and Bachelor's projects.